Throughout the 2010s, many people – including myself – treated it as a truism that manufacturing industries have faster productivity growth than service industries.

Historically that was true, and the reason wasn’t hard to grasp – machines improve faster than human beings do, so industries that depended on better machines naturally tended to advance faster than labor-intensive service industries.

But this particular piece of conventional wisdom stopped being true over a decade ago. In 2011, manufacturing productivity in the US hit a ceiling, and has actually declined in the years since:

Joey Politano has a good post where he breaks this productivity stagnation down by industry and shows that it holds true across industries in general. Here’s his key graph:

Importantly, this manufacturing slowdown isn’t mirrored by a general labor productivity slowdown across the economy! Service industries have been picking up the slack here, and keeping labor productivity growth going:

Service productivity rising faster than manufacturing productivity runs counter to many of the narratives you see in economics and policy debates. But it appears to be the reality for the last 13 years.

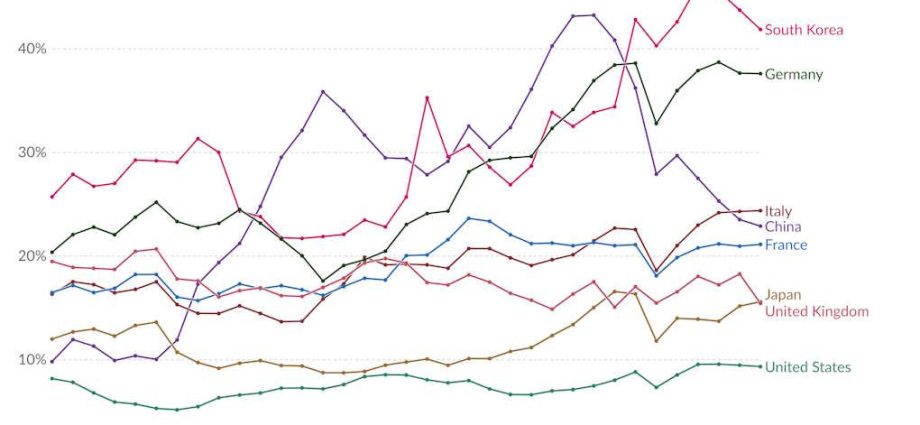

Americans seem to be waking up to the fact that something is wrong here. Greg Ip had a good chart showing that the stagnation in manufacturing productivity isn’t worldwide – the US and Japan have done uniquely badly since 2009:

A word of caution here: This data is cobbled together from various sources. One or more of those sources might have major problems, and even if not, they might make different methodological choices that make them not directly comparable (for example, including subcontractors or not).

But the stagnation is so broadly distributed across manufacturing industries that it’s pretty clear something big is going on here. Anyone who wants to revive the US manufacturing sector, for national security purposes or otherwise, needs to worry about the possibility that something is going especially wrong with the American system.

Did the problems begin earlier?

In fact, the troubles might have begun well before 2011, and simply been masked by two other forces: 1) Moore’s Law, and 2) China.

You’ll notice in Politano’s chart that one sector dominated manufacturing productivity growth from 1987 to 2005 – “computer and electronics.” A landmark 2014 paper by Houseman et al. showed just how crucial this sector was to US manufacturing in that era:

Manufacturing output statistics mask divergent trends within the sector…Real value-added in the computer and electronic products industry, which includes computers, semiconductors, telecommunications equipment, and other electronic products manufacturing, grew at a staggering rate of 22% per year from 1997 to 2007…Real value-added declined in seven industries over the decade…[W]ithout the computer and electronic products industry, which accounted for just 10 to 13 percent of value-added throughout the decade, manufacturing output growth in the United States was relatively weak.

And almost all of the growth in that sector was due to quality improvements — the US wasn’t producing more computers and computer chips, but thanks to Moore’s Law, we were producing better ones:

The rapid growth of real value-added in the computer and electronic products industry…can be attributed to two subindustries: computer manufacturing…and semiconductor and related device manufacturing…The extraordinary real GDP growth in these subindustries, in turn, is a result of the adjustment…for improvements in quality.

Now, producing better stuff, instead of more stuff, is real productivity growth! Moore’s Law represents real improvement in our productive power. But the fact that quality improvement was the main driver of total US manufacturing output for much of the pre-2011 period means that other things may have been quietly going very wrong in terms of the US ability to manufacture large quantities of output.
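To get a feel for how much weight a small sector can carry, here is a rough back-of-the-envelope sketch using only the figures quoted from Houseman et al. above (22% annual growth, a 10 to 13 percent share of value-added). The "share times growth rate" contribution formula is a first-order approximation I'm assuming for illustration, not the paper's own method.

```python
# Figures quoted from Houseman et al. above; the formulas are illustrative.
ANNUAL_GROWTH = 0.22                 # real value-added growth, computers & electronics
YEARS = 10                           # 1997 to 2007
SHARE_LOW, SHARE_HIGH = 0.10, 0.13   # sector's share of manufacturing value-added


def cumulative_multiple(rate: float, years: int) -> float:
    """How many times larger a quantity is after compounding at `rate`."""
    return (1.0 + rate) ** years


def approx_contribution(share: float, rate: float) -> float:
    """First-order contribution to aggregate growth: share times growth rate.

    This is an assumed approximation, not the paper's calculation.
    """
    return share * rate


multiple = cumulative_multiple(ANNUAL_GROWTH, YEARS)
low = approx_contribution(SHARE_LOW, ANNUAL_GROWTH)
high = approx_contribution(SHARE_HIGH, ANNUAL_GROWTH)

print(f"Real value-added multiple over the decade: {multiple:.1f}x")
print(f"Approx. contribution to manufacturing growth: "
      f"{low * 100:.1f} to {high * 100:.1f} points per year")
```

Under those assumptions, the sector's real value-added rises roughly sevenfold over the decade, and it alone adds something like 2 to 3 percentage points per year to measured manufacturing growth, despite being only about a tenth of the sector.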

And at the same time, there was another factor pumping up the US’ manufacturing productivity, especially in the 2000s: offshoring to China.

Until 2001, manufacturing output and manufacturing productivity went up in tandem in the US. Starting in 2001, productivity kept rising for a decade, while output flatlined:

The 2000s were the decade of the China Shock, when the US – along with many other rich countries – offshored a large amount of manufacturing work to the People’s Republic of China.

That raised measured manufacturing productivity in two ways. First, there’s a composition effect. Remember that in the 2000s, even as US manufacturing output per worker supposedly rose, the total number of manufacturing workers was falling off a cliff:

The most productive US manufacturers tended to stay competitive and survive this devastation, while less productive ones were driven out of business by Chinese competition. That composition effect will tend to raise measured productivity even if it doesn’t result in any increase in the productive power of American manufacturing overall.1
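The composition effect is easy to see with a toy example. In the sketch below, the three plants and all of their numbers are hypothetical; the point is only that sector-wide output per worker can rise even though no individual plant gets any better.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plant:
    name: str
    output: float   # units produced per year (hypothetical)
    workers: int


def sector_productivity(plants: list[Plant]) -> float:
    """Sector-wide output per worker: total output over total workers."""
    total_output = sum(p.output for p in plants)
    total_workers = sum(p.workers for p in plants)
    return total_output / total_workers


# Hypothetical sector: no plant changes its own productivity at any point.
before = [
    Plant("high", output=3000.0, workers=20),   # 150 per worker
    Plant("mid", output=2000.0, workers=20),    # 100 per worker
    Plant("low", output=1000.0, workers=20),    # 50 per worker
]
# Import competition closes the least productive plant.
after = [p for p in before if p.name != "low"]

prod_before = sector_productivity(before)
prod_after = sector_productivity(after)
output_before = sum(p.output for p in before)
output_after = sum(p.output for p in after)

print(f"Output per worker: {prod_before:.0f} -> {prod_after:.0f}")
print(f"Total output:      {output_before:.0f} -> {output_after:.0f}")
```

Measured productivity jumps from 100 to 125 per worker, while total output falls from 6,000 to 5,000 and a third of the jobs disappear. That is the pattern of rising productivity, flat or falling output, and collapsing employment described above.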

Second, offshoring to cheaper countries introduces biases in the data. Houseman et al. (2011) pointed out that when US manufacturers switch to suppliers in cheaper countries, the US government statistics often miss the switch, interpreting it as a rise in product quality rather than a drop in input cost.

That means that the manufacturing productivity benefits of offshoring to China in the 2000s were likely overstated. (Basically, Susan Houseman warned us, and we all should have been paying attention.)
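Here is a stylized sketch of how that kind of bias can arise. I'm assuming real value-added is computed by deflating output and inputs separately and subtracting (so-called double deflation), and every number is made up; this is an illustration of the mechanism, not a reproduction of Houseman et al.'s calculation.

```python
def real_value_added(output_value: float, output_price_index: float,
                     input_cost: float, input_price_index: float) -> float:
    """Deflate output and inputs separately, then subtract (double deflation)."""
    real_output = output_value / output_price_index
    real_inputs = input_cost / input_price_index
    return real_output - real_inputs


# Hypothetical firm: sells $100 of goods, buys $50 of parts domestically.
baseline = real_value_added(100.0, 1.0, 50.0, 1.0)

# It switches to an offshore supplier selling the same parts 20% cheaper.
# Physical output and physical inputs are unchanged; only the bill falls.
NEW_INPUT_COST = 40.0

# If the input price index captures the 20% price drop:
true_rva = real_value_added(100.0, 1.0, NEW_INPUT_COST, 0.8)

# If the statistics miss the switch and leave the index at 1.0:
measured_rva = real_value_added(100.0, 1.0, NEW_INPUT_COST, 1.0)

print(f"Baseline real value-added:     {baseline:.0f}")
print(f"After switch, price drop seen: {true_rva:.0f}")
print(f"After switch, price drop missed: {measured_rva:.0f}")
```

With the price drop captured, real value-added stays at 50, because nothing about production changed. With it missed, the cheaper bill looks like the firm is using fewer inputs, and measured real value-added (and so measured productivity) comes out 20% too high.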

So it’s very possible that serious structural problems in US manufacturing were brewing as early as the 90s but were covered up first by Moore’s Law and later by offshoring to China.

Anyway, let’s talk about some hypotheses as to why American manufacturing productivity flatlined.

Hypothesis 1: US manufacturers don’t buy enough machinery

One popular explanation for stagnating labor productivity in US manufacturing is low capital investment. Basically, a factory worker with machines is going to be more productive than a worker without machines. If you look at Chinese factories, they’re absolutely chock-full of machinery to help human workers do every task:

In the US, meanwhile, investment in this sort of capital equipment – and every other sort – has slumped in recent years. Capital intensiveness – basically, the amount of machines per worker – has increased more slowly since the 2009 recession:

Many observers point to this lack of investment as a factor behind slowing productivity. For example, Robert Atkinson of the Information Technology and Innovation Foundation writes:

In 2021, China had installed 18% more robots per manufacturing worker than the United States. And when controlling for the fact that Chinese manufacturing wages were significantly lower than US wages, China had 12 times the rate of robot use in manufacturing than the United States…The United States had 274 robots per 10,000 workers, while China had 322.

And he posts the following chart:

Robots are only one small part of capital investment. But they may be an indicator of a more general failure of capital accumulation in US manufacturing. After 2004, although a drop in total factor productivity (TFP) growth was the biggest culprit, capital deepening (an increase in the amount of capital per worker) also made a much smaller contribution to overall US productivity growth than in the years prior:

I should note that Chad Syverson, an expert on productivity measurement, is skeptical of this factor; he points out that there’s no short-term relationship between capital deepening and labor productivity. But that still leaves room for a long-term relationship, since companies presumably take time to learn how to most effectively use the equipment they buy.
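For readers who want the accounting behind the "capital deepening versus TFP" split, here is a minimal growth-accounting sketch. It assumes a Cobb-Douglas production function with a capital share of 0.35, and the before-and-after figures are hypothetical; real decompositions use measured factor shares and much more careful data.

```python
import math

CAPITAL_SHARE = 0.35  # assumed output elasticity of capital (illustrative)


def decompose(y0: float, y1: float, k0: float, k1: float,
              l0: float, l1: float, alpha: float = CAPITAL_SHARE):
    """Split log growth in output per worker into capital deepening and TFP.

    Uses d ln(Y/L) = alpha * d ln(K/L) + d ln(A), with TFP as the residual.
    """
    lp_growth = math.log(y1 / l1) - math.log(y0 / l0)
    deepening = alpha * (math.log(k1 / l1) - math.log(k0 / l0))
    tfp_growth = lp_growth - deepening
    return lp_growth, deepening, tfp_growth


# Hypothetical sector: output up 8%, capital up 15%, employment flat.
lp, deep, tfp = decompose(y0=100.0, y1=108.0, k0=300.0, k1=345.0,
                          l0=10.0, l1=10.0)

print(f"Labor productivity growth: {lp:.3f}")
print(f"  from capital deepening:  {deep:.3f}")
print(f"  from TFP (residual):     {tfp:.3f}")
```

The TFP term is whatever is left over after capital deepening is accounted for, which is why a slowdown in either piece shows up as slower labor productivity growth.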

If US manufacturers aren’t buying enough machinery, what’s the reason? Poor business prospects, due to slowing technological improvements, Chinese competition, and/or slowing population growth, are one obvious cause. If you don’t think your business can expand in the future, why buy machinery in the present?

Another possibility, raised by Robert Atkinson, is that US wages are too low:

[M]any [US] manufacturers can staff operations with a relatively low-paid workforce. This reduces their incentive to invest in raising productivity…[H]igher productivity enables firms to pay higher wages. Still, it’s also likely that the causation runs the other direction, with lower wages providing less motivation for raising productivity.

Of course, Chinese manufacturing wages are also low, but the government puts its thumb very heavily on the scale there, encouraging capital investment instead of letting China specialize in more labor-intensive products and production methods.

It’s also possible that the US financial system is broken when it comes to financing manufacturing. In other places – China, Japan, Korea, Europe, etc. – manufacturers usually finance themselves with bank loans. In the US, they mostly borrow from markets – i.e., they issue bonds.

US banks don’t do much financing of manufacturing; instead, they concentrate mostly on financing home mortgages, and on various kinds of bond trading. Banks might provide a crucial source of expertise in making loans to manufacturing companies – expertise that bond investors might simply lack. Also, banks are an easy lever for governments like China’s to boost their manufacturing industries by insisting that loans be made at below-market rates.

Finally, American management might just be short-sighted. Perhaps stock-based compensation discourages long-term investment. Or perhaps all the good managers have gone to work in software, finance, and consulting.

So there are a bunch of sub-hypotheses of why capital investment might be low in the US manufacturing industry. But in any case, let’s turn to the next explanation: industry concentration.

Hypothesis 2: The sector is too concentrated

In general, US industry has been getting more concentrated since around the turn of the century. This has fed fears of monopoly power. But a lack of competition might also be making US manufacturing industries more torpid and complacent. Politano writes:

Using data on the dispersion of productivity across factories and other establishments, it also becomes clear that the gap between the most and least productive US manufacturers increased considerably since the turn of the millennium. This gap is most stark in previously high-productivity-growth sectors like electronics – a small subset of factories saw substantial (if slower) productivity gains through the 2000s and 2010s while most establishments saw stagnating or declining productivity…[A] small subset of companies remained at the technological frontier, while a much larger share fell behind.

And he posts the following chart:

Syverson also suggests this as one possible explanation:

The second explanation, proposed by Andrews, Criscuolo, and Gal (2015), is that a productivity growth rate gap has opened between frontier firms and their less efficient industry cohorts. Andrews et al. (2015) show that companies at the global productivity frontiers of their respective industries did not experience reductions in their average productivity growth rates throughout the 2000s.

However, most other firms in their industries did see decelerations. It appears that something has impeded the mechanisms that diffuse best technologies and practices through an industry.

Here’s the graph from Andrews et al. (2015):

Now, it’s notable that the divergence is worse for service industries here, even though service-industry productivity in the US has kept on growing.

Also, that chart is for the entire OECD. Politano’s graph shows that the dispersion in US computer and electronics manufacturing productivity mostly happened in the 2000s and leveled off after 2010.

Data from Akcigit and Ates (2019) shows the productivity dispersion for overall US manufacturing mostly happening in the late 90s. So the timing doesn’t really seem to line up perfectly with the observed productivity slowdown since 2011.

But anyway, this could be one thing contributing to manufacturing’s slowdown.

Hypothesis 3: The US doesn’t export enough

One possibility that I haven’t seen anyone talk about, but which seems like a pretty obvious hypothesis, is that US manufacturers don’t export very much.

Industrial policy enthusiasts tend to be fans of the idea of “export discipline.” This is based on the theory that competing in export markets, instead of staying within the safer and less competitive domestic market, forces companies to adopt international best practices, while also offering them opportunities to develop new markets, invent new products, and absorb foreign technologies.

Manufactured goods figure prominently among the US’ export mix. But compared to other advanced countries, US exports just aren’t very significant as a percentage of its total economy:

A lot of this is just that the US is really, really big. The bigger a company’s domestic market, the less incentive there is to go looking for customers abroad. China is big too, but its government puts its thumb very heavily on the scale in favor of exports. If you’re a manufacturer in Pennsylvania, why bother selling to Korea when you can sell to Florida?

America’s weak export performance is exacerbated by its possession of the global reserve currency, which increases demand for the dollar and thus makes US exports uncompetitive. But even if exports rose so much that the entire trade deficit vanished, that would leave the US still behind Japan and the UK in terms of exports as a percent of GDP – and far behind China, France, Germany, etc.

If lots of American manufacturers are ignoring export markets, either voluntarily or due to macroeconomic factors beyond their control, that will also tend to weaken investment. The smaller your expected customer base, the less machinery you need to buy.

Hypothesis 4: The end of the rainbow

So far, all of these hypotheses have come with policy prescriptions attached. The US can incentivize its manufacturing businesses to invest more. It can encourage the circulation of workers between manufacturing companies, in order to diffuse know-how and innovation from frontier firms to lagging firms. And it can subsidize exports in various ways.

There are other hypotheses I didn’t list above2, such as bottlenecks in the manufacturing ecosystem and overregulation of land use, both of which also come with policy solutions attached. But there’s one more common explanation out there that’s much more pessimistic than the others, because it implies there’s very little to be done about the manufacturing productivity slowdown – at least, in the short term.

This is the hypothesis that manufacturing productivity growth depended on a set of key innovations – steam power, chemistry, combustion engines, electricity, computerization, and perhaps one or two others – that have now been mostly fully exploited.

Syverson suggests this possibility:

One is that the “easy wins” among information-technology-sourced TFP gains have largely been won, and producers have entered a period of diminished returns from these technologies. There is considerable evidence that information technologies (IT) were a key force behind the productivity acceleration of 1995-2004 (e.g., Jorgenson, Ho, and Stiroh, 2008). More recent work like Fernald (2015) and Byrne, Oliner, and Sichel (2015) have presented evidence that these IT-based gains have slowed over the past decade, however.

And The Economist sums this idea up succinctly, writing that “low-hanging fruit might have been plucked more eagerly in manufacturing.”

Why did manufacturing traditionally have such rapid productivity growth? One reason is that manufacturing technology is embodied — whereas improvements in services typically require imparting new knowledge to humans or changing up human organization, in manufacturing you can buy a new machine. This makes it easy to spread productivity-improving technologies. It also increases the demand for innovation, because machines are easier to sell at scale than business processes.

Another reason is that manufacturing is very modular, and thus lends itself to constant rearrangements of the production process. My favorite example is how electricity supercharged manufacturing productivity in the early 20th century not by offering cheaper energy, but by enabling the rearrangement of factory floors into a bunch of little independent workstations.

It’s conceivable that both of those processes are now reaching the end of the rainbow that began in the Industrial Revolution. It’s possible that factory floors have been optimized, machine tools installed, and production processes computerized.

There’s no guarantee that those techniques will be able to keep boosting manufacturing productivity at historic rates forever. In fact, manufacturing R&D in the US has grown in real terms since 1990, at a steady (linear) rate. But it’s not doing much to boost productivity.

Of course, even if that low-hanging fruit has been picked, manufacturing productivity can still improve. It can still get cheaper energy – which, thanks to solar, may now become a reality.

And it can get better inputs – better materials, more high-performing computer chips, and so on. But if the problem of how to set up a factory has been mostly solved, it might put a big damper on overall manufacturing productivity growth – even if AI makes some marginal improvements.

OK but if that’s the case, how come other countries – Germany, Korea, France, etc. – have managed to increase their manufacturing productivity over the past 13 years?

One possibility is that they’re just catching up to the US and Japan, which were the leaders in manufacturing productivity back in the early 1990s. I can’t find a great data set comparing absolute levels of manufacturing productivity across countries over time, but I’ll keep looking.

Another important thing to remember is that we’ve been talking about labor productivity. If manufacturers buy more machinery, labor productivity will go up, because each human can do more. But machinery isn’t free – creating it and maintaining it comes at a cost. And it’s possible to buy too much of it.

(If you don’t believe me, imagine buying 20 machine tools for each human being in the labor force; they wouldn’t be able to operate them all! That’s an extreme example, but you see the principle.)

It’s possible that all those vast fields of machine tools in the China factory videos are something the US should emulate. But it’s also possible that they’re wasteful — that China has over-automated, and that its economy is going to be held back by paying the upkeep and obsolescence costs on a bunch of machinery that made only marginal improvements in labor productivity.

The way to test this would be to look at total factor productivity. TFP measures the combined productivity of labor and capital (or at least, it tries to). If you pump up labor productivity past the optimal point by buying too many machines, your capital productivity should go down, and your TFP will remain unchanged.
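A toy simulation makes the distinction concrete. The sketch below assumes a Cobb-Douglas production function with a capital share of 0.35 and a fixed workforce; the capital stocks are hypothetical. It holds true TFP constant and just keeps piling on machinery.

```python
from typing import NamedTuple

ALPHA = 0.35  # assumed capital share (illustrative)


class Snapshot(NamedTuple):
    capital: float
    labor_productivity: float    # output per worker
    capital_productivity: float  # output per unit of capital
    tfp: float                   # output per combined unit of inputs


def simulate(tfp: float, capital: float, labor: float,
             alpha: float = ALPHA) -> Snapshot:
    """Produce output via Y = A * K^alpha * L^(1-alpha), then back out ratios."""
    combined_inputs = capital**alpha * labor**(1 - alpha)
    output = tfp * combined_inputs
    return Snapshot(capital, output / labor, output / capital,
                    output / combined_inputs)


# Same technology (A = 1) and same 100 workers; only the capital stock grows.
results = [simulate(tfp=1.0, capital=k, labor=100.0)
           for k in (100.0, 200.0, 400.0)]

for r in results:
    print(f"K={r.capital:>5.0f}  Y/L={r.labor_productivity:.2f}  "
          f"Y/K={r.capital_productivity:.2f}  TFP={r.tfp:.2f}")
```

Each doubling of the capital stock raises output per worker and lowers output per unit of capital, while TFP stays put. So a country can post impressive labor productivity numbers purely by buying machines, without its underlying efficiency improving at all.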

And when we look at the TFP for advanced economies, we see that it has flatlined for every single country on Greg Ip’s chart from above, since right around…2010 or 2011.

China’s TFP has even gone down in recent years.

Now, there are some big caveats here. First of all, TFP is hard to measure, and I don’t entirely trust these numbers. Second, this is TFP for the whole economy, including services, manufacturing, and agriculture – not just for manufacturing alone. So this is very far from definitive proof that humanity has reached the end of the Industrial Revolution rainbow. But it’s at least one hypothesis we should look into.

Anyway, why American manufacturing productivity has stagnated is a very important open question. More than just a few percentage points of economic growth hang in the balance here – defense and defense-related manufacturing will be one of the keys to victory in Cold War 2, and the US has a lot of catching up to do in that regard. So we had better get started chasing down some of these hypotheses – and any other plausible ones we can think of.

1 Note: This may be one reason US and Japanese manufacturing productivity grew more slowly than others in the 2010s. US offshoring to China slowed considerably after the Great Recession, while Japanese offshoring to China was always limited by the political troubles between the two countries.

So some of the relatively faster manufacturing productivity growth of Taiwan, France, etc. in the 2010s might be because they kept going full speed ahead with offshoring to China. This could be a combination of “real” productivity growth (specialization), composition effects, and statistical artifacts similar to the one Houseman et al. (2011) document for the US.

2 Note: I talk about overregulation of land use and bottlenecks in the supply chain so much that I didn’t feel the need to go over them again. Both also have significant weaknesses as overarching explanations for the manufacturing productivity slowdown – in particular, the timing is way off for both. But in any case, my list of hypotheses is not an exhaustive one.

This article was first published on Noah Smith’s Noahpinion Substack and is republished with kind permission. Read the original here and become a Noahpinion subscriber here.