In June 2022, Google engineer Blake Lemoine made headlines by claiming that the company’s LaMDA chatbot had achieved sentience. The software had the conversational ability of a precocious seven-year-old, Lemoine said, and we should assume it possessed a similar awareness of the world.

LaMDA, later released to the public as Bard, is powered by a “large language model” (LLM) of the same kind that powers OpenAI’s ChatGPT bot. Other big tech companies are rushing to deploy similar technology.

Hundreds of millions of people have now had the chance to play with LLMs, but few seem to believe they are conscious. Instead, in the poetic phrase of linguist and data scientist Emily Bender, they are “stochastic parrots,” which chatter convincingly but without understanding. But what about the next generation of artificial intelligence (AI) systems, and the one after that?

Our team of philosophers, neuroscientists and computer scientists looked to current scientific theories of how human consciousness works to draw up a list of basic computational properties that any hypothetically conscious system would likely need to possess. In our view, no current system comes anywhere near the bar for consciousness, but there is also no obvious reason why future systems won’t become truly aware.

Finding indicators

Since computing pioneer Alan Turing proposed his “Imitation Game” in 1950, the ability to successfully impersonate a human in conversation has often been taken as a reliable marker of consciousness. This is usually because the task seemed so difficult that it must require consciousness.

However, as with the 1997 defeat of grandmaster Garry Kasparov by the chess computer Deep Blue, the conversational fluency of LLMs may just move the goalposts. Is there a principled way to approach the question of AI consciousness that doesn’t rely on our intuitions about what is difficult or special about human cognition?

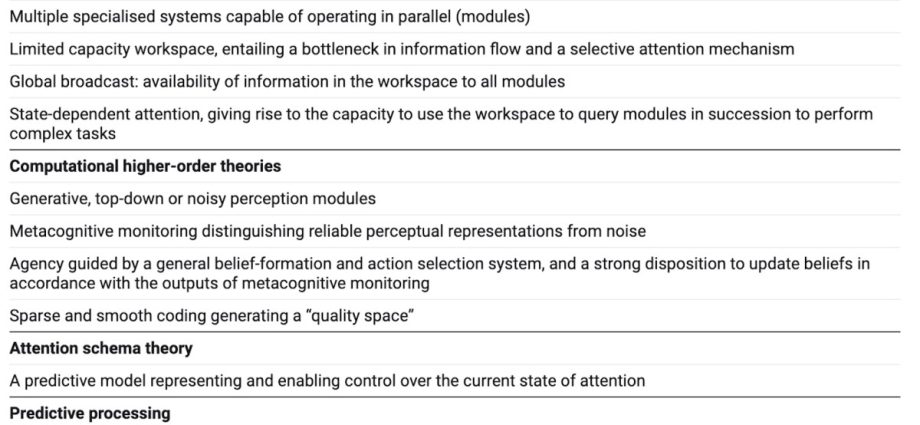

Our recent white paper aims to do just that. We compared current scientific theories of what makes humans conscious to compile a list of “indicator properties” that could then be applied to AI systems.

We don’t think systems that possess the indicator properties are definitely conscious, but the more indicators a system has, the more seriously we should take claims that it is conscious.

The computational processes behind consciousness

What sort of indicators were we looking for? We avoided overt behavioral criteria, such as being able to hold conversations with people, because these tend to be both human-centric and easy to fake.

Instead, we looked at theories of the computational processes that support consciousness in the human brain. These can tell us about the kind of information processing needed to support subjective experience.

“Global workspace theories,” for example, postulate that consciousness arises from the presence of a capacity-limited bottleneck that collates information from all parts of the brain and selects information to make globally available. “Recurrent processing theories” emphasize the role of feedback from later processes to earlier ones.

Each theory in turn suggests more specific indicators. Our final list contains 14 indicators, each focusing on an aspect of how systems work rather than how they behave.

No reason to think current systems are conscious

How do current technologies stack up? Our analysis suggests there is no reason to think current AI systems are conscious.

Some do meet a few of the indicators. Systems using the transformer architecture, a type of machine-learning model behind ChatGPT and similar tools, meet three of the “global workspace” indicators but lack the crucial ability for global rebroadcast. They also fail to satisfy most of the other indicators.

So, despite ChatGPT’s impressive conversational abilities, there is probably nobody home inside. Other architectures similarly meet at best a handful of criteria.

Most current architectures meet only a few of the indicators at most. However, for most of the indicators, there is at least one current architecture that meets it.

This suggests there are no obvious, in-principle technical barriers to building AI systems that satisfy most or all of the indicators.

It is probably a matter of when, not if, some such system is built. Of course, plenty of questions will still remain when that happens.

Beyond human consciousness

The scientific theories we discuss (and the authors of the paper!) don’t always agree with one another. We used a list of indicators rather than strict criteria to acknowledge that fact. This can be a powerful methodology in the face of scientific uncertainty.

We were inspired by similar debates about animal consciousness. Most of us think at least some nonhuman animals are conscious, despite the fact that they cannot converse with us about what they are feeling.

A 2021 report from the London School of Economics, which argued that cephalopods such as octopuses likely feel pain, was instrumental in changing UK animal ethics policy. A focus on structural features has the surprising consequence that even some simple animals, like insects, might possess a minimal form of consciousness.

Our report does not make recommendations for what to do with conscious AI. This question will become more pressing as AI systems inevitably become more powerful and more widely deployed.

Our indicators will not be the last word, but we hope they will serve as a first step in tackling this tricky question in a scientifically grounded way.

Colin Klein is a professor of philosophy at the Australian National University.

This article is republished from The Conversation under a Creative Commons license. Read the original article.