Much has been said and written about how artificial intelligence will revolutionize the world as we know it, from the way we learn and work to how we traverse the globe and beyond. But concern is growing over the use of AI in warfare, which, alongside climate change, could lead to devastating consequences for humanity.

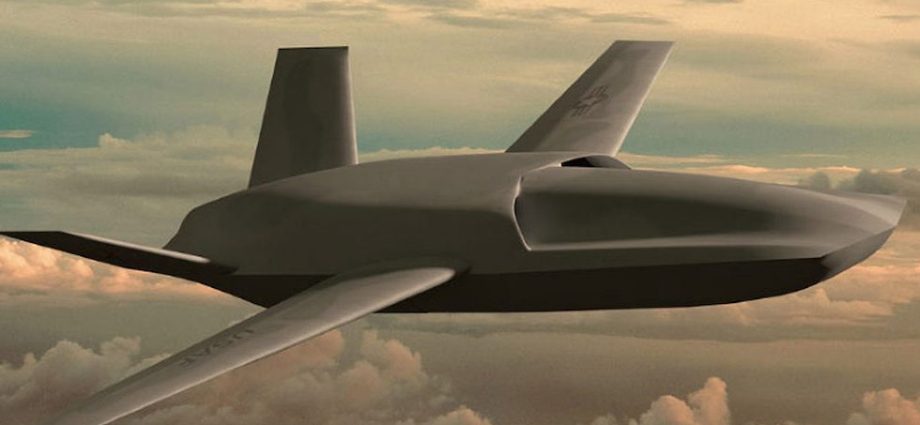

AI already has combat experience. In March 2020, a Turkish-made drone used facial recognition to engage enemy combatants in Libya. Three years on, there is still a lack of regulation of advanced weapons that seem to have come straight from the pages of a dystopian science-fiction novel.

Questions worth asking stem from the near impossibility of ensuring that autonomous weapons adhere to the principles of international humanitarian law. Can we trust autonomous weapons to distinguish between civilians and combatants? Will they be able to minimize harm to civilians? Without human intervention, will they pull back in situations where emotional judgment is critical?

In the absence of human control, it will be difficult to fix accountability for war crimes. Morally, allowing machines to make decisions about killing humans, by reducing people to data, can be a form of digital dehumanization. Strategically, the proliferation of AI weapons technology will make it easier for countries to add such weapons to their arsenals.

But most important, by reducing casualties, an increased deployment of AI weapons will lower the threshold for countries deciding whether to go to war.

As the use of AI weapons technology increases, the implications could be catastrophic if access barriers and costs fall far enough for non-state actors to include such weapons in their arsenals. Because the provenance of these weapons is difficult to prove, non-state actors could use them to wreak havoc while maintaining deniability.

Vladimir Putin’s infamous 2017 statement on AI, where he reportedly said “the one who becomes the leader in this sphere will be the ruler of the world,” rings more true now than ever.

There is now a silent and growing AI arms race. This year, the Pentagon requested $145 billion from the US Congress for a single fiscal year to boost spending on critical technology and strengthen collaboration with the private sector.

In addition to calling for “building bridges with America’s dynamic innovation ecosystem,” the request called for AI funding.

Last December, the Pentagon established the Office of Strategic Capital (OSC) to incentivize private-sector investment in military-use technologies. The office also solicits ideas from the private sector on next-generation technologies, one of which it describes as “trusted AI and autonomy,” without specifying what “trusted AI” means.

The China factor

Nonetheless, one hopes that this semantic shift is reflective of serious consideration being given within the American military-industrial complex to ethical and legal issues surrounding AI in warfare. Or it could be linked to US-China competition.

China’s policy of Military-Civilian Fusion (MCF) is similar to the American strategy. The US has had a long head start, while China’s MCF first emerged in the late 1990s. However, it is only since Xi Jinping came to power that MCF has increasingly aimed to mirror America’s military-industrial complex.

Just like the OSC, MCF policy aims to pursue leadership in AI. One of its recommended policy tools would sit just as well in the US: the MCF aims to establish venture capital funds to encourage civilian innovation in AI.

Concerns about AI proliferation aside, no country on the planet can make a convincing case for becoming the sole arbiter of AI standards in warfare. Nor is a global AI weapons ban feasible. Given this, there needs to be an urgent international focus on global minimum standards for the use of AI in warfare. This should also include a discussion on how AI will increasingly transform warfare itself.

In March, a US government official spoke about using large language models (LLMs) in information warfare. While ChatGPT has been pilloried (and sued) for “hallucinating,” or generating fake information, more sophisticated LLMs could be deployed by countries to generate hallucinations against enemies.

A military-grade LLM could be used to turbocharge fake news, create deepfakes, increase phishing attacks and even subvert a country’s entire information ecosystem. It is instructive that the US Defense Department official referred to ChatGPT as the “talk of the town.”

In May, leading American AI firms, including OpenAI, the maker of ChatGPT, asked Congress to regulate AI. Even if standards were established, they would be difficult to apply in situations where it becomes hard to discern the boundary between the state and the private sector, whether due to a country’s choice (China), weak institutions (Russia), or through a carefully nurtured symbiosis (US).

In Israel, successful tech firms that specialize in dual-use military-civilian technology have been founded by retired military officials. The Israeli military has acknowledged using an AI tool to select targets for air strikes. Although its AI military tools are reportedly under human supervision, they are not subject to regulation by any institution, as is the case in most countries.

It then becomes crucial to look at every AI-using country’s domestic governing system – does it have, or want to have, institutions and laws that regulate AI effectively?

In contrast to its efforts to regulate nuclear-weapons proliferation, the world has made very little progress on regulating the AI arms race. This is likely due to the fast pace of innovation in the AI space. The window of opportunity for regulating AI, then, is fast shrinking. Will the global community keep pace?

This article was provided by Syndication Bureau, which holds copyright.