It’s become fashionable to think of artificial intelligence (AI) as an inherently dehumanizing technology, a ruthless force of automation that has unleashed legions of virtual skilled laborers in faceless form.

But what if AI turns out to be the one tool able to identify what makes your ideas special, recognizing your unique perspective and potential on the issues where it matters most?

You’d be forgiven if you’re distraught about society’s ability to grapple with this new technology. So far, there’s no lack of prognostications about the democratic doom that AI may wreak on the US system of government.

There are legitimate reasons to be concerned that AI could spread misinformation, break public comment processes on regulations, inundate legislators with artificial constituent outreach, help to automate corporate lobbying, or even generate laws in a way tailored to benefit narrow interests.

But there are reasons to feel more sanguine as well. Many groups have started demonstrating the potential beneficial uses of AI for governance. A key constructive-use case for AI in democratic processes is to serve as discussion moderator and consensus builder.

To help democracy scale better in the face of growing, increasingly interconnected populations – as well as the wide availability of AI language tools that can generate reams of text at the click of a button – the US will need to leverage AI’s capability to rapidly digest, interpret and summarize this content.

An old problem

There are two different ways to approach the use of generative AI to improve civic participation and governance. Each is likely to lead to drastically different experiences for public policy advocates and other people trying to have their voice heard in a future system where AI chatbots are both the dominant readers and writers of public comment.

For example, consider individual letters to a representative, or comments as part of a regulatory rulemaking process. In both cases, we the people are telling the government what we think and want.

For more than half a century, agencies have been using human power to read through all the comments received, and to generate summaries of and responses to their major themes. To be sure, digital technology has helped.

In 2021, the Council of Federal Chief Data Officers recommended modernizing the comment review process by implementing natural language processing tools for removing duplicates and clustering similar comments in processes governmentwide. These tools are simplistic by the standards of 2023 AI.

They work by assessing the semantic similarity of comments based on metrics like word frequency (How often did you say “personhood”?), then clustering similar comments to give reviewers a sense of what topic each group relates to.
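To make the word-frequency idea concrete, here is a minimal sketch in Python. It is not the tooling any agency actually uses: the function names, the 0.5 similarity threshold, the greedy grouping rule and the sample comments are all assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def word_counts(text):
    """Lowercase the text and count how often each word appears."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity of two word-count vectors (0.0 means no shared words)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster_comments(comments, threshold=0.5):
    """Greedy grouping: a comment joins the first cluster whose seed it resembles."""
    vectors = [word_counts(c) for c in comments]
    clusters = []  # lists of comment indices; the first index in each list is the seed
    for i, vec in enumerate(vectors):
        for cluster in clusters:
            if cosine_similarity(vec, vectors[cluster[0]]) >= threshold:
                cluster.append(i)
                break
        else:
            # No existing cluster is similar enough, so start a new one.
            clusters.append([i])
    return clusters


# Hypothetical comments, invented for this example.
comments = [
    "The new fee will hurt small family farms",
    "This new fee will hurt small farms like mine",
    "Please extend the reporting deadline for rural clinics",
]
clusters = cluster_comments(comments)
print(clusters)
```

The two fee comments share most of their words and land in one group, while the deadline comment starts its own. Nothing here understands meaning: two comments making the same point in different words would be split apart.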

Getting the gist

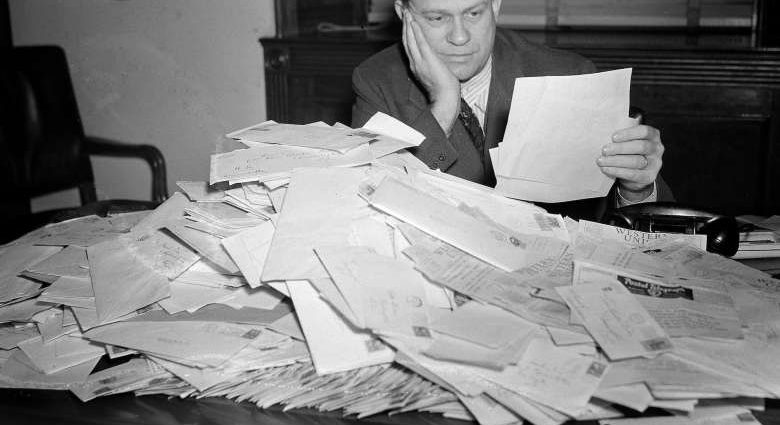

Think of this approach as collapsing public opinion. These tools take a big, hairy mass of comments from thousands of people and condense them into a tidy set of essential reading that generally suffices to represent the broad themes of community feedback.

This is far easier for a small agency staff or legislative office to handle than it would be for staffers to actually read through that many individual perspectives.

But what’s lost in this collapsing is individuality, personality and relationships. The reviewer of the condensed comments may miss the personal circumstances that led so many commenters to write in with a common point of view, and may overlook the arguments and anecdotes that might be the most persuasive content of the testimony.

Most importantly, the reviewers may miss out on the opportunity to recognize committed and knowledgeable advocates, whether interest groups or individuals, who could have long-term, productive relationships with the agency.

These drawbacks have real ramifications for the potential efficacy of those thousands of individual messages, undermining the very purpose for which all those people wrote in. Still, practicality tips the balance toward some kind of summarization approach. A passionate letter of advocacy doesn’t hold any value if regulators or legislators simply don’t have time to read it.

Finding the signals and the noise

There is another approach. In addition to collapsing testimony through summarization, government staff can use modern AI techniques to explode it. They can automatically recover and recognize a distinctive argument from one piece of testimony that does not exist in the thousands of other testimonies received.

They can discover the kinds of constituent stories and experiences that legislators love to repeat at hearings, town halls and campaign events. This approach can sustain the potential impact of individual public comment to shape legislation even as the volumes of testimony may rise exponentially.

In computing, there is a rich history of that type of automation task in what is called outlier detection.

Traditional methods generally involve finding a simple model that explains most of the data in question, like a set of topics that describes the vast majority of submitted comments well. But then they go a step further by isolating those data points that fall outside the mold: comments whose arguments don’t fit into the neat little clusters.
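One simple way to picture this is a nearest-neighbor check: a comment counts as an outlier if it does not resemble any other comment in the pile. The sketch below swaps that check in for the topic models a real system might use. The `find_outliers` name, the 0.3 threshold and the sample comments are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def word_counts(text):
    """Lowercase the text and count how often each word appears."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity of two word-count vectors (0.0 means no shared words)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def find_outliers(comments, threshold=0.3):
    """Return indices of comments whose closest neighbor is still dissimilar."""
    vectors = [word_counts(c) for c in comments]
    outliers = []
    for i, vec in enumerate(vectors):
        # Similarity to the single most similar *other* comment.
        best = max(
            (cosine_similarity(vec, other)
             for j, other in enumerate(vectors) if j != i),
            default=0.0,
        )
        if best < threshold:
            outliers.append(i)
    return outliers


# Hypothetical comments, invented for this example.
comments = [
    "The new fee will hurt small family farms",
    "This new fee will hurt small farms like mine",
    "Small farms cannot afford the new fee",
    "My well water testing lab closes before the filing window opens",
]
outliers = find_outliers(comments)
print(outliers)
```

The first three comments repeat a common theme and explain one another. The fourth shares almost no vocabulary with the rest, so it is the one surfaced for a human to read.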

State-of-the-art AI language models aren’t necessary for identifying outliers in text document data sets, but using them could bring a greater degree of sophistication and flexibility to this procedure. AI language models can be tasked to identify novel perspectives within a large body of text through prompting alone. You simply need to tell the AI to find them.
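As a rough illustration of what “telling the AI” might look like, the sketch below only assembles the text of such a request. It does not call any model or vendor API, and the `build_novelty_prompt` function, the wording of the instructions and the sample comments are all hypothetical.

```python
def build_novelty_prompt(comments, max_picks=3):
    """Assemble a plain-text prompt asking a language model to flag distinctive comments."""
    # Number the comments so the model can refer back to them unambiguously.
    numbered = "\n".join(
        f"[{i}] {text}" for i, text in enumerate(comments, start=1)
    )
    return (
        "Below are public comments on a proposed rule.\n"
        f"List the numbers of up to {max_picks} comments that make an argument "
        "or share an experience not found in the others, and explain each "
        "choice in one sentence.\n\n"
        f"{numbered}"
    )


# Hypothetical comments, invented for this example.
prompt = build_novelty_prompt([
    "The new fee will hurt small family farms",
    "Small farms cannot afford the new fee",
    "My well water testing lab closes before the filing window opens",
])
print(prompt)
```

The resulting text would then be sent to whatever language model an office uses. How reliably a given model picks out the truly novel comments is something staff would still need to check.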

In the absence of that ability to extract distinctive comments, lawmakers and regulators have no choice but to prioritize based on other factors. If there is nothing better, “who donated the most to our campaign” or “which company employs the most of my former staffers” become reasonable metrics for prioritizing public comments. AI can help elected representatives do much better.

If Americans want AI to help revitalize the country’s ailing democracy, they need to think about how to align the incentives of elected leaders with those of individuals. Right now, as much as 90% of constituent communications are mass emails organized by advocacy groups, and they go largely ignored by staffers.

People are channeling their passions into vast digital warehouses where algorithms box up their expressions so they don’t have to be read. As a result, the incentive for citizens and advocacy groups is to fill that box up to the brim, so someone will notice it is overflowing.

A talented, knowledgeable, engaged citizen should be able to articulate their ideas and share their personal experiences and distinctive points of view in a way that is both included with everyone else’s comments, where it contributes to the summary, and recognized individually among them.

An effective comment summarization process would extricate those unique points of view from the pile and put them into lawmakers’ hands.

Bruce Schneier is Adjunct Lecturer in Public Policy, Harvard Kennedy School, and Nathan Sanders is Affiliate, Berkman Klein Center for Internet & Society, Harvard University.

This article is republished from The Conversation under a Creative Commons license. Read the original article.