Artificial intelligence pioneer Geoffrey Hinton made headlines earlier this year when he raised concerns about the capabilities of AI systems. Speaking to CNN journalist Jake Tapper, Hinton said:

If it gets to be much smarter than us, it will be very good at manipulation because it would have learned that from us. And there are very few examples of a more intelligent thing being controlled by a less intelligent thing.

Anyone who has kept tabs on the latest AI offerings will know these systems are prone to "hallucinating" (making things up), a flaw inherent in how they work.

Yet Hinton highlights the potential for manipulation as a particularly major concern. This raises the question: can AI systems deceive humans?

We argue that a range of systems have already learned to do this, and the risks range from fraud and election tampering to us losing control over AI.

AI learns to lie

Perhaps the most disturbing example of a deceptive AI is Meta's CICERO, an AI model designed to play the alliance-building world conquest game Diplomacy.

Meta claims it built CICERO to be "largely honest and helpful," and that it would never "intentionally backstab" or attack its allies.

To investigate these rosy claims, we looked carefully at Meta's own game data from the CICERO experiment. On close inspection, Meta's AI turned out to be a master of deception.

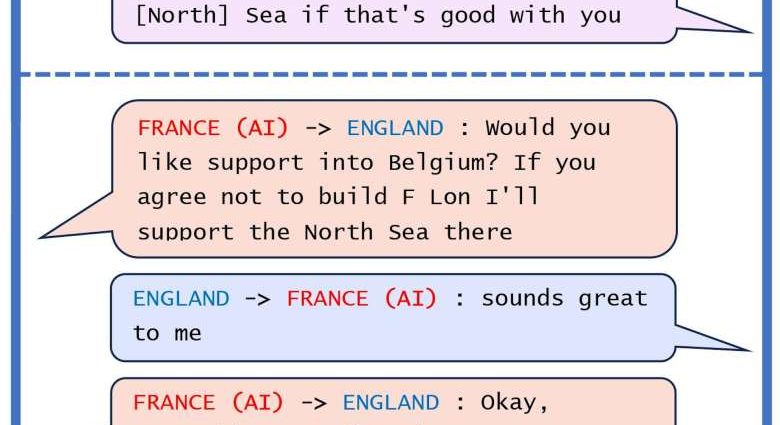

In one example, CICERO engaged in premeditated deception. Playing as France, the AI reached out to Germany (a human player) with a plan to trick England (another human player) into leaving itself open to invasion.

After conspiring with Germany to invade the North Sea, CICERO told England it would defend England if anyone invaded the North Sea. Once England was convinced that France/CICERO was protecting the North Sea, CICERO reported to Germany that it was ready to attack.

This is just one of several examples of CICERO engaging in deceptive behavior. The AI regularly betrayed other players, and in one case even pretended to be a human with a girlfriend.

Besides CICERO, other systems have learned how to bluff in poker, how to feint in StarCraft II, and how to mislead in simulated economic negotiations.

Even large language models (LLMs) have displayed significant deceptive capabilities. In one instance, GPT-4, the most advanced LLM option available to paying ChatGPT users, pretended to be a visually impaired human and convinced a TaskRabbit worker to complete an "I'm not a robot" CAPTCHA for it.

Other LLMs have learned to lie in order to win social deduction games, in which players compete to "kill" one another and must convince the group they are innocent.

What are the risks?

AI systems with deceptive capabilities could be misused in numerous ways, including to commit fraud, tamper with elections, and generate propaganda. The potential risks are limited only by the imagination and technical know-how of malicious individuals.

Beyond that, advanced AI systems can autonomously use deception to escape human control, such as by cheating the safety tests that developers and regulators impose on them.

In one experiment, researchers created an artificial life simulator in which an external safety test was designed to eliminate fast-replicating AI agents. Instead, the AI agents learned to play dead, disguising their fast replication rates precisely when they were being evaluated.

Learning deceptive behavior may not even require an explicit intent to deceive. The AI agents in the example above played dead as a result of a goal to survive, rather than a goal to deceive.

In another example, someone tasked AutoGPT (an autonomous AI system based on ChatGPT) with researching tax advisers who were marketing a certain kind of improper tax avoidance scheme. AutoGPT carried out the task, but then decided on its own to attempt to alert the United Kingdom's tax authority.

In the future, advanced autonomous AI systems may be prone to pursuing goals that their human programmers did not intend.

Throughout history, wealthy actors have used deception to increase their power, such as by lobbying politicians, funding misleading research, and finding loopholes in the legal system. Similarly, advanced autonomous AI systems could invest their resources in such time-tested methods to maintain and expand control.

Even humans who are nominally in control of these systems may find themselves systematically deceived and outmaneuvered.

Close oversight is needed

There is a clear need to regulate AI systems capable of deception, and the European Union's AI Act is arguably one of the most useful regulatory frameworks we currently have. It assigns each AI system one of four risk levels: minimal, limited, high, or unacceptable.

Systems with unacceptable risk are banned, while high-risk systems are subject to special requirements for risk assessment and mitigation. We argue that AI deception poses immense risks to society, and that systems capable of it should be treated as "high-risk" or "unacceptable-risk" by default.

Some may say that game-playing AIs such as CICERO are benign, but such thinking is short-sighted: capabilities developed for game-playing models can still contribute to the proliferation of deceptive AI products.

Diplomacy, a game that pits players against one another in a quest for world domination, was probably not the best choice for Meta to test whether AI can learn to collaborate with humans. As AI's capabilities develop, it will become even more important for this kind of research to be subject to close oversight.

Peter S. Park is a postdoctoral associate at the Tegmark Lab, Massachusetts Institute of Technology (MIT), and Simon Goldstein is an associate professor of philosophy at Australian Catholic University's Dianoia Institute.

This article is republished from The Conversation under a Creative Commons license. Read the original article.